Data Centers and the Next Wave of Distributed Generation

It is no secret that demand for electricity is surging. As discussed in a recent OnLocation report, a key driver of robust demand growth has been the explosion in construction of data centers, especially the “hyperscale facilities” that support cloud operations for major players such as Google and Microsoft. About 500 large data centers are now under construction or planned in the United States. These facilities consume enormous amounts of electricity, as illustrated by a few examples:

- Hallador Energy, owner of the 1080 MW coal-fired Merom plant in Indiana, is working to finalize an agreement to sell most of the plant’s output to an unnamed data center customer.

- A recently announced project would build 4,500 MW of gas-fired capacity on the site of the old Homer City coal plant in Pennsylvania to support data centers. The total cost of the project is reported at $10 billion for the data centers and generators. The project would be connected to the grid and would be one of the largest power plants in the United States.

- Even the Homer City project is dwarfed by the ambitions of OpenAI and its Stargate project, which initially is to entail building up to 10 new data center projects, each about 1,000 MW.

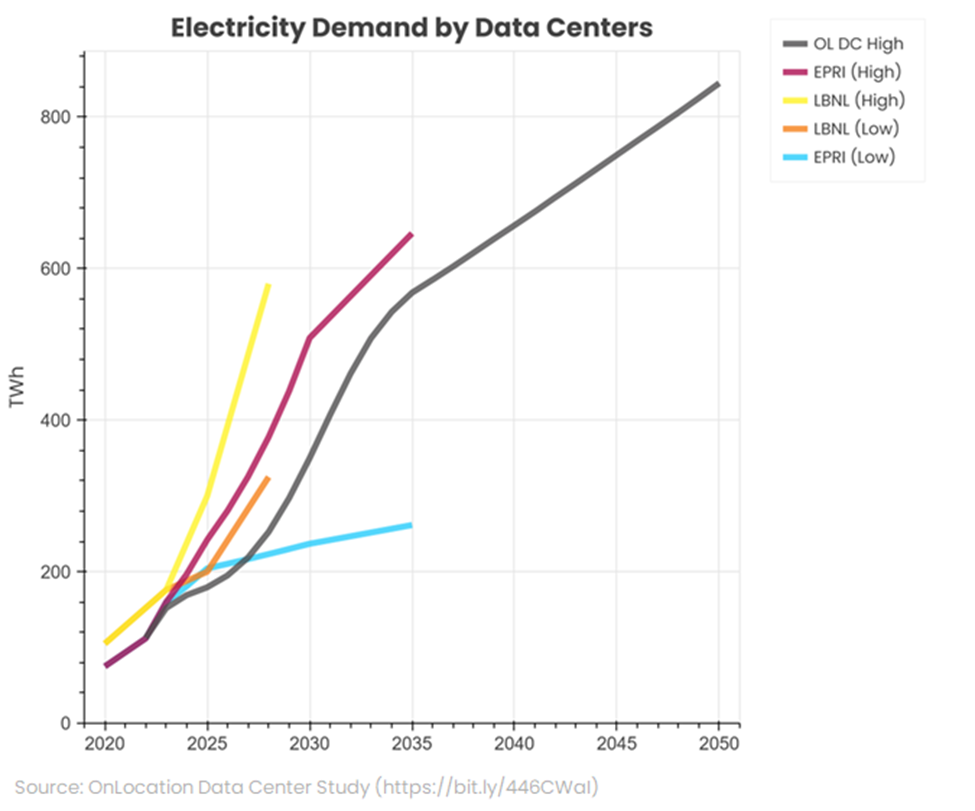

OnLocation estimates that data center demand will total more than 800 TWh annually by 2050 (Figure 1), equivalent to about 20 percent of all electricity sales in 2024. This growth will be turbocharged by AI applications, which require much more electricity than typical data center operations. By one estimate, a ChatGPT query uses about 2.9 watt-hours of electricity, almost 10 times more than a search on Google (0.3 watt-hours), and training the AI applications requires almost constant access to power. There are, of course, uncertainties in demand forecasts. For instance, the new Chinese DeepSeek AI system appears to be vastly more energy efficient than competitors. But it is unclear whether such efficiency gains will reduce power usage proportionally or simply increase the demand for AI services (the so-called Jevons Paradox).
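The scale comparisons above are simple arithmetic. A rough sketch in Python, using only the figures quoted in this post; the helper `annual_twh` and the 100 percent load factor are illustrative assumptions (an upper bound), not values from the OnLocation report:

```python
# Back-of-envelope checks on the demand figures quoted in the text.
HOURS_PER_YEAR = 8760  # 365 days; ignores leap years


def annual_twh(capacity_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy in TWh for a load of capacity_mw at the given load factor.

    load_factor=1.0 (running flat out all year) is an illustrative upper bound.
    """
    return capacity_mw * HOURS_PER_YEAR * load_factor / 1e6  # MWh -> TWh


# Per-query energy use as quoted, in watt-hours.
chatgpt_wh = 2.9
google_wh = 0.3
query_ratio = chatgpt_wh / google_wh

# If 800 TWh is about 20 percent of 2024 sales, the implied 2024 total is:
implied_sales_2024_twh = 800 / 0.20

# Annual energy for a single 1,000 MW data center at full load.
one_gw_twh = annual_twh(1000)

# Number of such facilities that would add up to 800 TWh.
facilities_for_800_twh = 800 / one_gw_twh

print(f"ChatGPT vs. Google query energy: {query_ratio:.1f}x")
print(f"Implied 2024 electricity sales: {implied_sales_2024_twh:,.0f} TWh")
print(f"1,000 MW at full load: {one_gw_twh:.2f} TWh per year")
print(f"Facilities of that size to reach 800 TWh: {facilities_for_800_twh:.0f}")
```

Under these assumptions, a 1,000 MW facility running continuously draws about 8.76 TWh a year, so 800 TWh corresponds to roughly 91 such facilities. Real load factors are lower than 100 percent, which would raise that count.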

In the highly competitive and rapidly growing data processing market, firms want to be able to build quickly. But power supply is proving to be a bottleneck. There are delays due to clogged interconnection queues and the need for new transmission capacity. And as discussed below, there are also long waits for new gas-fired combustion turbines. According to OnLocation’s recent study, “Average construction timeline for power infrastructure and backup systems before 2020 was 1-3 years. Limited access to power means it can now take 2-6 years. Large data centers needing >100 MW of electricity wait up to 7 years to connect to the grid.” Consequently, the data center boom is leading to unprecedented growth in large-scale (one MW or greater) “distributed” generation; that is, generating capacity dedicated to a specific facility, as described in the Homer City example above. The generator can be physically co-located with the consuming facility, but it can also be at another site and connected by local transmission lines. In contrast, building long-distance interstate transmission to serve data centers can be controversial, with critics claiming that it provides a lifeline to coal plants that would otherwise be retired.

Distributed power is attractive to data center developers as a means of bypassing interconnection and transmission construction delays. Historically, distributed generation was most associated with relatively small-scale co-generation (that is, combined heat and power or CHP) plants at industrial and certain types of commercial sites, such as college campuses. The largest traditional CHP plant in the U.S. is the 1,854 MW Midland facility in Michigan, one of only four traditional distributed generators with a nameplate capacity of 1,000 MW or greater. In contrast, data centers can routinely require 1,000 MW or more. The U.S. Department of Energy, seeking to accelerate growth in data centers even further, has jumped into the game by offering 16 locations on federal property for the construction of data centers and accompanying power plants.

Another factor that differentiates this new generation of distributed power from its predecessors is that in some cases the facilities are not connected to the larger power grid. The benefits of “islanded” (also referred to as behind-the-meter or BTM) projects include shorter time to service, as there is no need to wait for transmission facilities to be built; avoidance of utility transmission and demand charges; and escape from FERC regulation and the uncertainties of wholesale power market pricing and regulation. For example, LS Power is proposing to build 300 MW of new, BTM simple cycle combustion turbines near its Doswell plant in Virginia to directly serve planned nearby data centers. GE Vernova, a supplier of combustion turbines, has entered a joint venture aimed at supplying up to 4,000 MW of capacity to data centers, at least initially for islanded BTM applications.

BTM facilities also avoid certain regulatory cost allocation issues. There is a risk that transmission facilities will be built but the data center projects are never completed, leaving the utility and its ratepayers holding the bag for project costs. This problem can perhaps be addressed with tariff design, but a BTM project avoids the issue altogether. Some consumer advocates have argued that a large data center load that requires a utility to invest in transmission and to cover increased peak demand imposes costs on ratepayers who see few if any benefits from these investments. Meta, the parent of Facebook, is planning a $10 billion project in Louisiana with a load in excess of 2,000 MW. Scheduled to come online in 2030, the project will be supported by a $6 billion investment by Entergy Louisiana, including a 10,000-acre solar farm, three combustion turbines with 2,262 MW of capacity, and the addition of 100 miles of transmission lines. Entergy claims that customers will see no increase in costs, but consumer advocates are concerned that ratepayers will eventually pick up part of the tab for a massive investment targeted to support a single customer. Yet another example of regulatory complexities is Amazon’s ongoing effort to receive additional dedicated capacity from the Susquehanna nuclear plant in Pennsylvania. FERC rejected the proposal because of cost allocation and reliability concerns with committing more of Susquehanna’s capacity to a single customer. A BTM arrangement with a data center connected to a new, off-the-grid generator would presumably avoid these kinds of complications.

However, there are also downsides. Most obviously, if the data center’s dedicated generator and any backup fail, the data center cannot rely on the larger power system for support. It is on its own. Islanded facilities also represent, at least arguably, a lost opportunity for the larger grid. In principle, data center generators can be used as a grid resource that can help meet peak demand and provide power if other grid resources fail. The Electric Power Research Institute (EPRI) is pursuing a “DC Flex” initiative to work with data center operators and utilities to test these concepts. Another variation is for data centers to reduce their load during peaks to lower the strain on grid resources. Google has been testing a demand response program in which the grid operator notifies Google when power supplies in a region will be tight. Google can then delay non-critical tasks and route some other tasks to data centers outside the region under stress.

A Duke University study suggests that existing transmission infrastructure could support up to 126 GW of new large loads if those loads, such as data centers, curtailed their demand by 0.5% of their planned maximum uptime. While this may sound minimal, data centers typically require 24/7 service with an uptime of 99.999% (about five minutes of downtime annually); this makes voluntary curtailments unattractive unless the facility has backup power or a Google-like ability to shift processing to alternative facilities.
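To put the two percentages side by side, here is a rough sketch in Python. It assumes the 0.5% is read as a share of annual hours at full curtailment, which is a simplification for illustration rather than the study's own definition; the function and variable names are likewise illustrative.

```python
# Compare a 0.5% curtailment with a "five nines" uptime target.
HOURS_PER_YEAR = 365 * 24              # 8,760; ignores leap years
MINUTES_PER_YEAR = HOURS_PER_YEAR * 60  # 525,600


def downtime_minutes(availability_pct: float) -> float:
    """Minutes per year a service may be down at the given availability."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


# Downtime allowed under a 99.999% uptime target.
five_nines_minutes = downtime_minutes(99.999)

# Assumption: 0.5% curtailment treated as 0.5% of the hours in a year.
curtailment_pct = 0.5
curtailed_hours = HOURS_PER_YEAR * curtailment_pct / 100

# How many times larger the curtailment is than the downtime allowance.
ratio = curtailed_hours * 60 / five_nines_minutes

print(f"Five-nines downtime allowance: {five_nines_minutes:.2f} minutes per year")
print(f"0.5% curtailment: {curtailed_hours:.1f} hours per year")
print(f"Curtailment is about {ratio:.0f} times the allowance")
```

On this reading, a 0.5% curtailment amounts to roughly 44 hours a year, about 500 times the downtime a five-nines facility tolerates, which is why backup power or load shifting matters.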

One question each data center with distributed generation must answer is which technology to use as a power source. Gas-fired simple or combined cycle turbines provide firm power, but the surge in national power demand has outstripped manufacturers’ capacity to build combustion turbines, and the wait time for getting a turbine from the factory can reportedly run more than five years. In contrast, by one estimate, renewable power with battery support can be stood up in a year to 18 months. AEP, for its part, is buying 1,000 MW of fuel cells from Bloom Energy to help support data centers looking to begin operations rapidly.

The long lead times to install gas-fired capacity may also help to explain the interest data centers have shown in distributed nuclear power, in the form of Small Modular Reactors (SMRs). Carbon-free nuclear power also provides a hedge against future greenhouse gas regulation. As discussed in another OnLocation blog post, data center demand may help jumpstart a revival of American nuclear power.

As noted above, for further insight into the impact of data centers on power markets see our special report. Or contact OnLocation to tap into our wider energy, analytical, and modeling expertise.